Advent of Code in 3D!


In my previous post, I detailed how I combined CoreGraphics, AVFoundation, and Metal to quickly view and generate visualizations for Advent of Code. With this new setup, I wondered: could I do the image generation part completely in Metal? I have been following tutorials from Warren Moore (and his Medium page), The Cherno, and LearnOpenGL for a while, so I took this opportunity to test out my newfound skills.

If you’d like to follow along, the majority of the code is in the Solution3DContext.swift file of my Advent of Code 2022 repository.

Subtle Differences

When using CoreGraphics, I had a check-in and submit architecture:

- Get a CoreGraphics context with nextContext().

- Draw to this context using CoreGraphics APIs.

- Submit the context with submit(context:pixelBuffer).
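In the CoreGraphics version, each frame is checked out, drawn immediately, and handed back. Here is a minimal sketch of that shape. It assumes a hypothetical FrameSink protocol and a toy pixel canvas standing in for a real CGContext and pixel buffer, so treat it as an illustration rather than the repository's code:

```swift
// Hypothetical sketch of a check-out/submit frame API. The method names echo
// nextContext() and submit(...) from the post, but every type here is invented.
protocol FrameSink {
    associatedtype Context
    /// Check out a fresh drawing target for the next frame.
    func nextContext() throws -> Context
    /// Hand the finished frame back so it can be encoded.
    func submit(context: Context) throws
}

/// Toy drawing target: a class, so drawing mutates the checked-out instance in place.
final class PixelCanvas {
    let width: Int
    let height: Int
    private(set) var pixels: [UInt32]

    init(width: Int, height: Int) {
        self.width = width
        self.height = height
        pixels = Array(repeating: 0, count: width * height)
    }

    /// Stand-in for real drawing calls.
    func fill(_ color: UInt32) {
        pixels = Array(repeating: color, count: pixels.count)
    }
}

/// Stand-in for the encoder: it only records what was submitted.
final class RecordingSink: FrameSink {
    let width: Int
    let height: Int
    private(set) var submitted: [PixelCanvas] = []

    init(width: Int, height: Int) {
        self.width = width
        self.height = height
    }

    func nextContext() -> PixelCanvas {
        PixelCanvas(width: width, height: height)
    }

    func submit(context: PixelCanvas) {
        submitted.append(context)
    }
}

// One check-out, draw, submit cycle per frame.
let sink = RecordingSink(width: 4, height: 4)
for frame in 0 ..< 3 {
    let canvas = sink.nextContext()
    canvas.fill(UInt32(frame))
    sink.submit(context: canvas)
}
```

Nothing persists between frames in this style; every frame is drawn from scratch, which is the main contrast with a retained 3D scene.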

With 3D rendering, you typically generate a scene, tweak settings on the scene, and submit rendering passes to do the work for you.

Before rendering, meshes and textures need to be preloaded. For this I created the following:

- loadMesh provides a means to load model files from the local bundle.

- loadBoxMesh creates a box mesh with the given dimensions in the x, y, and z directions.

- loadPlaneMesh creates a plane with the given dimensions in the x, y, and z directions.

- loadSphereMesh creates a sphere with the given radius in the x, y, and z directions.
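One plausible way to build these primitives is Model I/O's parametric MDLMesh initializers. The sketch below assumes that approach; the repository may do it differently, and makeBoxMesh, makePlaneMesh, and makeSphereMesh are hypothetical names:

```swift
import ModelIO

// Hypothetical helpers in the spirit of loadBoxMesh / loadPlaneMesh / loadSphereMesh.
// Passing an MTKMeshBufferAllocator(device:) places vertex data in Metal buffers;
// nil lets Model I/O use its default allocator.

func makeBoxMesh(size: SIMD3<Float>, allocator: MDLMeshBufferAllocator? = nil) -> MDLMesh {
    MDLMesh(boxWithExtent: size,
            segments: SIMD3<UInt32>(1, 1, 1),
            inwardNormals: false,
            geometryType: .triangles,
            allocator: allocator)
}

func makePlaneMesh(size: SIMD3<Float>, allocator: MDLMeshBufferAllocator? = nil) -> MDLMesh {
    MDLMesh(planeWithExtent: size,
            segments: SIMD2<UInt32>(1, 1),
            geometryType: .triangles,
            allocator: allocator)
}

func makeSphereMesh(radii: SIMD3<Float>, allocator: MDLMeshBufferAllocator? = nil) -> MDLMesh {
    // The extent describes the bounding box, so per-axis radii are doubled.
    MDLMesh(sphereWithExtent: radii * 2,
            segments: SIMD2<UInt32>(32, 32),
            inwardNormals: false,
            geometryType: .triangles,
            allocator: allocator)
}
```

To get vertex buffers ready to bind, build the mesh with an MTKMeshBufferAllocator(device:) and wrap it with MetalKit's MTKMesh(mesh:device:).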

The renderer uses a rough implementation of Physically Based Rendering. Each mesh is therefore composed of information about base color, metallic, roughness, normals, emissiveness, and ambient occlusion. The methods above exist in two forms: one that takes raw values and one that takes textures.

With the meshes available above, a simplistic node system is used to define objects in the scene. Each node has a transformation matrix and points to a mesh and materials. The materials are copied at initialization, so a mesh can be created with some defaults, but then modified later.
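Copying materials at initialization falls out naturally from Swift value types. Here is a minimal sketch, assuming hypothetical Material, Mesh, and Node structs (not the repository's actual types) and only the constant-valued form of each PBR input:

```swift
import simd

/// PBR inputs for one object (constant-valued form only, for brevity).
struct Material {
    var baseColor: SIMD3<Float>
    var metallic: Float
    var roughness: Float
    var emissive: SIMD3<Float> = .zero
    var ambientOcclusion: Float = 1.0
}

/// A loaded mesh plus the default material it was created with.
struct Mesh {
    let name: String
    let defaultMaterial: Material
}

/// A scene node: a transform, a reference to a mesh by name, and its own material copy.
struct Node {
    let name: String
    let meshName: String
    var transform: simd_float4x4 = matrix_identity_float4x4
    var material: Material

    init(name: String, mesh: Mesh) {
        self.name = name
        self.meshName = mesh.name
        // Assigning a struct copies it, so later edits to `material` stay local to this node.
        self.material = mesh.defaultMaterial
    }
}

let redSphere = Mesh(name: "Red Sphere",
                     defaultMaterial: Material(baseColor: SIMD3<Float>(1, 0, 0), metallic: 1.0, roughness: 0.5))
var node = Node(name: "Sphere 0", mesh: redSphere)
node.material.roughness = 0.05   // only this node changes; the mesh default stays 0.5
```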

With a scene in place, the process of generating images becomes:

- Modify existing node transformations and materials.

- Use snapshot to render the scene to an offscreen texture and then submit it to our visible renderer and encoding system.

If I want to render a scene of spheres with different material properties, I can use the following:

```swift
// Preload one red sphere mesh; each node below gets its own copy of its material.
try loadSphereMesh(name: "Red Sphere", baseColor: SIMD3<Float>(1.0, 0.0, 0.0), ambientOcclusion: 1.0)

// Four white directional lights, aimed at the origin from each front corner.
let lightIntensity = SIMD3<Float>(1, 1, 1)
addDirectLight(name: "Light 0", lookAt: SIMD3<Float>(0, 0, 0), from: SIMD3<Float>(-10.0, 10.0, 10.0), up: SIMD3<Float>(0, 1, 0), color: lightIntensity)
addDirectLight(name: "Light 1", lookAt: SIMD3<Float>(0, 0, 0), from: SIMD3<Float>( 10.0, 10.0, 10.0), up: SIMD3<Float>(0, 1, 0), color: lightIntensity)
addDirectLight(name: "Light 2", lookAt: SIMD3<Float>(0, 0, 0), from: SIMD3<Float>(-10.0, -10.0, 10.0), up: SIMD3<Float>(0, 1, 0), color: lightIntensity)
addDirectLight(name: "Light 3", lookAt: SIMD3<Float>(0, 0, 0), from: SIMD3<Float>( 10.0, -10.0, 10.0), up: SIMD3<Float>(0, 1, 0), color: lightIntensity)

updateCamera(eye: SIMD3<Float>(0, 0, 5), lookAt: SIMD3<Float>(0, 0, 0), up: SIMD3<Float>(0, 1, 0))

let numberOfRows: Float = 7.0
let numberOfColumns: Float = 7.0
let spacing: Float = 0.6
let scale: Float = 0.4

// Build the grid: metallic falls off by row, roughness rises by column.
for row in 0 ..< Int(numberOfRows) {
    for column in 0 ..< Int(numberOfColumns) {
        let index = (row * Int(numberOfColumns)) + column
        let name = "Sphere \(index)"
        let metallic = 1.0 - (Float(row) / numberOfRows)
        let roughness = min(max(Float(column) / numberOfColumns, 0.05), 1.0)

        // Offset each sphere so the grid is centered on the origin.
        let translation = SIMD3<Float>(
            (spacing * Float(column)) - (spacing * (numberOfColumns - 1.0)) / 2.0,
            (spacing * Float(row)) - (spacing * (numberOfRows - 1.0)) / 2.0,
            0.0
        )
        let transform = simd_float4x4(translate: translation) * simd_float4x4(scale: SIMD3<Float>(scale, scale, scale))

        addNode(name: name, mesh: "Red Sphere")
        updateNode(name: name, transform: transform, metallicFactor: metallic, roughnessFactor: roughness)
    }
}

// Render 2000 frames, swinging the whole grid back and forth about the y axis.
// frameRate comes from the surrounding rendering context.
for frame in 0 ..< 2000 {
    let time = Float(frame) / Float(frameRate)

    for row in 0 ..< Int(numberOfRows) {
        for column in 0 ..< Int(numberOfColumns) {
            let index = (row * Int(numberOfColumns)) + column
            let name = "Sphere \(index)"
            let translation = SIMD3<Float>(
                (spacing * Float(column)) - (spacing * (numberOfColumns - 1.0)) / 2.0,
                (spacing * Float(row)) - (spacing * (numberOfRows - 1.0)) / 2.0,
                0.0
            )
            // Scale, then translate into the grid, then rotate about the origin.
            let transform = simd_float4x4(rotateAbout: SIMD3<Float>(0, 1, 0), byAngle: sin(time) * 0.8) *
                simd_float4x4(translate: translation) *
                simd_float4x4(scale: SIMD3<Float>(scale, scale, scale))
            updateNode(name: name, transform: transform)
        }
    }

    // Render the updated scene and hand the frame to the encoder.
    try snapshot()
}
```
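The translation math centers the grid on the origin: cell i of n sits at spacing * i - spacing * (n - 1) / 2, so the first and last cells land symmetrically at plus or minus spacing * (n - 1) / 2. A small sketch that makes this explicit and easy to check (centeredOffset and gridPosition are my own hypothetical helpers, not from the repository):

```swift
/// Position of cell `i` in a line of `count` cells spaced `spacing` apart,
/// shifted so the line is centered on 0.
func centeredOffset(_ i: Int, count: Int, spacing: Float) -> Float {
    spacing * Float(i) - spacing * Float(count - 1) / 2.0
}

/// Grid position for (row, column), matching the translation built in the loops.
func gridPosition(row: Int, column: Int, rows: Int, columns: Int, spacing: Float) -> SIMD3<Float> {
    SIMD3<Float>(centeredOffset(column, count: columns, spacing: spacing),
                 centeredOffset(row, count: rows, spacing: spacing),
                 0)
}
```

Assuming simd's column-vector convention, the rotation on the left of the product is applied after the translation, so the grid swings as a whole around the world origin instead of each sphere spinning in place.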

Or, I can go a bit crazy with raw objects, models, and lights:

Additional Notes

For the encoding and muxing pipeline to work, each CVPixelBuffer must be vended by AVFoundation and later submitted back to it. Apple provides CVMetalTextureCache as a great mechanism for creating a Metal texture that shares the same IOSurface as a pixel buffer, which makes the render target nearly free to create.
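Here is a sketch of that pairing. It assumes a BGRA, Metal-compatible pixel buffer, such as one drawn from an AVAssetWriterInputPixelBufferAdaptor's pixelBufferPool; the helper names makeTextureCache and makeTexture are mine:

```swift
import CoreVideo
import Foundation
import Metal

/// Create one texture cache per device and reuse it for every frame.
func makeTextureCache(device: MTLDevice) -> CVMetalTextureCache? {
    var cache: CVMetalTextureCache?
    let status = CVMetalTextureCacheCreate(kCFAllocatorDefault, nil, device, nil, &cache)
    return status == kCVReturnSuccess ? cache : nil
}

/// Wrap a pixel buffer in an MTLTexture backed by the same IOSurface (no copy).
/// Assumes a single-plane BGRA buffer created with Metal compatibility.
func makeTexture(from pixelBuffer: CVPixelBuffer,
                 cache: CVMetalTextureCache,
                 pixelFormat: MTLPixelFormat = .bgra8Unorm) -> (cvTexture: CVMetalTexture, texture: MTLTexture)? {
    var textureRef: CVMetalTexture?
    let status = CVMetalTextureCacheCreateTextureFromImage(
        kCFAllocatorDefault,
        cache,
        pixelBuffer,
        nil,                                   // no extra texture attributes
        pixelFormat,
        CVPixelBufferGetWidth(pixelBuffer),
        CVPixelBufferGetHeight(pixelBuffer),
        0,                                     // plane index
        &textureRef)
    guard status == kCVReturnSuccess,
          let cvTexture = textureRef,
          let texture = CVMetalTextureGetTexture(cvTexture) else {
        return nil
    }
    return (cvTexture, texture)
}
```

The CVMetalTexture has to stay alive until the GPU finishes with the MTLTexture, which is why the sketch returns both.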

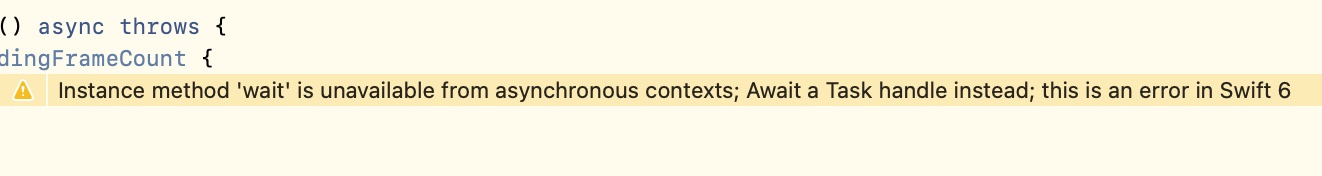

Rendering pipelines tend to use semaphores to cap the number of frames in flight, so the CPU doesn’t modify resources the GPU is still reading. This code uses Swift Concurrency, which requires that tasks always make forward progress; a semaphore wait can block a thread indefinitely, which breaks that contract. Xcode already complains about this under Swift 6.0, but I’ll cross that bridge once I get there.
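A common workaround (not something the repository does today) is an async counting semaphore built on an actor, where waiting suspends the task rather than blocking a thread. A minimal sketch with a hypothetical InFlightLimiter type:

```swift
/// Async counting semaphore: wait() suspends the calling task instead of
/// blocking a thread, so it respects Swift Concurrency's forward-progress rule.
actor InFlightLimiter {
    private var available: Int
    private var waiters: [CheckedContinuation<Void, Never>] = []

    init(limit: Int) {
        precondition(limit > 0)
        available = limit
    }

    /// Acquire a slot, suspending until one is free.
    func wait() async {
        if available > 0 {
            available -= 1
            return
        }
        await withCheckedContinuation { continuation in
            waiters.append(continuation)
        }
    }

    /// Release a slot, handing it directly to the oldest waiter if there is one.
    func signal() {
        if waiters.isEmpty {
            available += 1
        } else {
            waiters.removeFirst().resume()
        }
    }

    var freeSlots: Int { available }
}
```

In a render loop you would await wait() before encoding a frame and call signal() from a Task spawned inside the command buffer's addCompletedHandler closure.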

Model I/O is both amazing and infuriating. It can read a wide range of model formats, such as OBJ and USDZ files, but you quickly discover that everyone builds their models a little differently. As noted above, each material property could come from a texture, a float value, or a float vector. Even though the parsing comes for free, interpreting the results can turn into a large pile of code.
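Model I/O reports each material property's kind through MDLMaterialProperty.type (for example .texture, .float, or .float3), and each case needs its own handling. One way to contain that is to normalize every channel into a small enum up front. A sketch, assuming hypothetical MaterialInput and ResolvedInput types and a fallback value for channels a model omits:

```swift
/// Where a single PBR channel came from in the source model (hypothetical).
enum MaterialInput {
    case texture(path: String)
    case scalar(Float)
    case vector(SIMD3<Float>)
}

/// What the renderer actually binds: a texture to load or a constant.
enum ResolvedInput: Equatable {
    case texture(path: String)
    case constant(SIMD3<Float>)
}

/// Normalize a channel. A missing channel falls back to `fallback`; a scalar is
/// splatted across RGB so scalar and vector sources can share one shader path.
func resolve(_ input: MaterialInput?, fallback: SIMD3<Float>) -> ResolvedInput {
    switch input {
    case .none:
        return .constant(fallback)
    case .texture(let path)?:
        return .texture(path: path)
    case .scalar(let value)?:
        return .constant(SIMD3<Float>(repeating: value))
    case .vector(let value)?:
        return .constant(value)
    }
}
```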